The allure of automation is surging across the oil and gas industry. Yet its benefits, including consistency, efficiency, safety, machine learning, and 24/7 productivity, come with an inherent risk/reward tipping point, because achieving them means moving critical compute technologies from pristine data centers into daunting field environments. That tipping point is downtime.

Any given oil rig uses standard laptops for general computing. If a laptop breaks or needs maintenance, it’s an inconvenience, but the downtime to replace or restore it isn’t detrimental to the rig’s overall performance or protections. These non-essential, “disposable” computers are purchased without much deliberation or effort.

On the flipside, there are high-reliability fielded compute functions—like drilling activities, production monitoring, and processing massive amounts of data for real-time decision making and system adjustments—that can cripple production, performance, profitability and safety if they fail.

For essential automation, AI, and machine learning compute operations, oil and gas companies must assess the big-picture risks if a system goes down. The fallout from a single failure can extend beyond the obvious operational and financial hits (drilling delays, gas leaks, oil spills, employee injuries, or even loss of life) into long-term damage to the company’s reputation, customer relationships, the environment, and even future staffing. This is where quality standards, equipment decisions and preventative measures are no longer expenses. They are strategic investments.

Do more—and do it better—with less

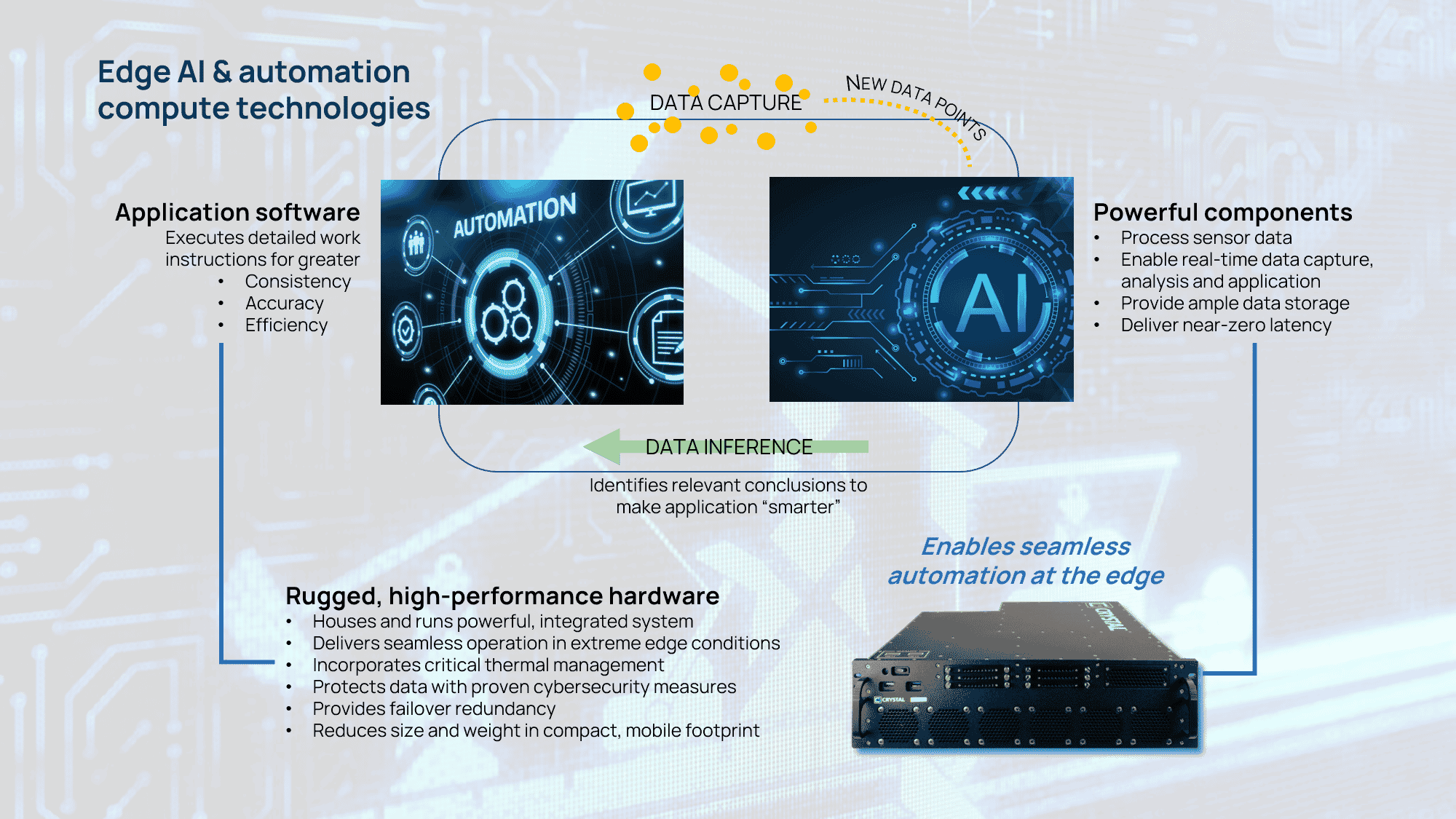

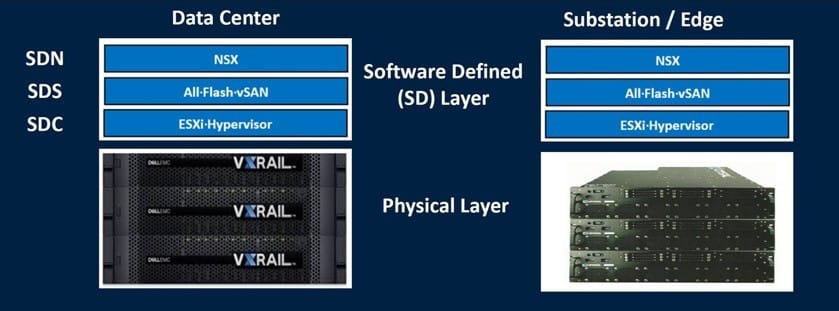

When taking critical compute functions to the edge, less is best, making virtualization a multi-tasking utopia. This versatile architecture consolidates multiple compute, storage and networking resources into a single, purpose-built package. Armed with the power, capacity, reliability and ultra-low latency to execute automation and AI algorithms, virtualization delivers consistent operations and real-time situational awareness for immediate decision making when time, accuracy and safety are paramount.

Along with shrinking the overall footprint into a compact, easy-to-deploy system, virtualization incorporates fail-over redundancy. In the event of a problem with the hardware or established security parameters, the system migrates operations automatically to other servers without any disruption.
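To make the fail-over idea concrete, here is a minimal sketch of how workloads might be moved off a faulted host onto healthy ones. It is an illustration only, not how any particular virtualization platform implements migration: the `Host` class, the `fail_over` function, the host names, and the workload names are all hypothetical, and a real hypervisor would also handle state replication, storage, and networking.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Host:
    """Hypothetical edge server with a health flag and a list of workloads."""
    name: str
    healthy: bool = True
    workloads: list[str] = field(default_factory=list)


def fail_over(hosts: list[Host]) -> dict[str, str]:
    """Move workloads off unhealthy hosts; return {workload: new host name}."""
    survivors = [h for h in hosts if h.healthy]
    if not survivors:
        raise RuntimeError("no healthy host available for failover")
    moves: dict[str, str] = {}
    for host in hosts:
        if host.healthy:
            continue
        while host.workloads:
            workload = host.workloads.pop(0)
            # Pick the least-loaded healthy host to keep the cluster balanced.
            target = min(survivors, key=lambda h: len(h.workloads))
            target.workloads.append(workload)
            moves[workload] = target.name
    return moves


# Illustrative cluster: names and workloads are made up.
hosts = [
    Host("edge-a", workloads=["drilling-control", "leak-monitor"]),
    Host("edge-b", workloads=["historian"]),
    Host("edge-c"),
]
hosts[0].healthy = False  # simulate a hardware fault on edge-a
moves = fail_over(hosts)
print(moves)
```

The least-loaded placement rule is a design choice made for this sketch; production systems typically weigh CPU, memory, and affinity constraints as well.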

Less physical hardware also reduces the initial financial investment and ongoing maintenance costs. In fact, real-time updates, recalibrations and control setpoint adjustments can be managed remotely with the push of a button, saving valuable time and resources. Over time, operational trend data helps establish preventative maintenance schedules and defines tailored operating modes for emergency situations to stay ahead of catastrophic equipment failures.
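As a rough illustration of how operational trend data could feed a preventative maintenance schedule, the sketch below fits a straight line to a short series of sensor readings and estimates how many samples remain before the trend reaches an alarm limit. The readings, the limit, and the function names are invented for the example; real condition-monitoring systems use far richer models.

```python
from __future__ import annotations

from statistics import mean


def slope_per_sample(readings: list[float]) -> float:
    """Least-squares slope of the readings against their sample index."""
    n = len(readings)
    if n < 2:
        raise ValueError("need at least two readings")
    x_bar = (n - 1) / 2
    y_bar = mean(readings)
    num = sum((i - x_bar) * (y - y_bar) for i, y in enumerate(readings))
    den = sum((i - x_bar) ** 2 for i in range(n))
    return num / den


def samples_until_limit(readings: list[float], limit: float) -> float | None:
    """Estimate samples left before the trend crosses `limit`.

    Returns None when the trend is flat or falling.
    """
    slope = slope_per_sample(readings)
    if slope <= 0:
        return None
    return max(0.0, (limit - readings[-1]) / slope)


# Made-up daily vibration readings (mm/s) and a hypothetical alarm limit.
vibration_mm_s = [2.0, 2.5, 3.0, 3.5, 4.0]
remaining = samples_until_limit(vibration_mm_s, limit=7.0)
print(remaining)
```

With one reading per day, the estimate reads as the number of days available to schedule service before the limit is reached, assuming the trend stays linear.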

Respect the power of the unpredictable

Recently, a Fortune 500 upstream oil and gas leader admitted that the industrial server they’ve been using in the field for less than five years is failing. Why? It wasn’t designed to survive or function at maximum duty in remote locations for extended periods. Now, they’re in the position of replacing these servers with new equipment.

Anyone who’s been on the front lines of oil and gas exploration or extraction knows the brutal, highly unpredictable nature of these environments. This work is not for the faint of heart, nor is it for traditional off-the-shelf computer hardware. From unpredictable weather conditions to harsh environmental factors, like shock and vibration, salt fog, dust, and caustic chemicals, these variables wreak havoc on standard commercial units, which causes…downtime.

Taking high-performance compute solutions out of climate-controlled data centers to the edge requires specific ruggedization design elements and manufacturing techniques. Even the best software and hardware components become irrelevant if the appliance that houses them isn’t developed and built specifically for the heightened demands of edge environments. This has been our sole focus since introducing the first military-grade rugged server in 2006.

Our proficiency in providing rugged, high-performance computer hardware enables successful execution of front-line applications. These include automated oil and gas field operations in well drilling, completions and production, mobile fracking command centers, and monitoring solutions for real-time gas leak detection. This reliability ensures the backend control system and monitoring software operate effectively throughout well development and completion.

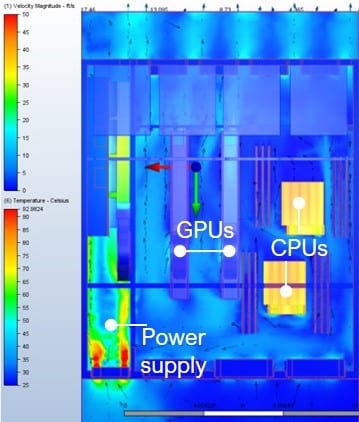

As experts in thermal management, we consistently solve perplexing heat-generation challenges. From custom heatsinks to liquid, fan, and fanless sealed-chassis cooling techniques, every solution optimizes the unit’s thermal performance to keep critical components running smoothly while preventing computer hardware from throttling.

Integrating rugged chassis, innovative thermal strategies, and leading AI and autonomy software and components from partners like NVIDIA, Intel, VMware, Advantech and CommScope not only executes the desired application with flawless precision but also ensures all the pieces fit and work together to maximize performance and minimize downtime.

It’s all a matter of perspective

Risk is an inherent element of any business decision or approach. Weighing risk tolerance against strategic and operational priorities is what separates expenses from investments.

While healthy margins and ample cash flow are coveted outcomes to fuel ongoing success and growth, compromising on critical operation enablers—like edge compute solutions—based on price over performance can shatter any positive momentum with a single failure.

Is that a risk you’re willing to take?

Original article posted in E+P Magazine.